GenAI, the youngest member of the AI family, is evolving quickly, and the global life sciences industry is attempting to evolve with it. However, as a keynote session at DIA's MASC suggested, what the industry needs now is to pause, understand the risks of AI and prepare to comply with the EU AI Act.

On March 13, 2024, the European Parliament passed the EU Artificial Intelligence Act. Endorsed by 523 members of the European Parliament, the Act is intended to set broad principles, and requirements, for developers of AI systems. At the same time, the robotics company Figure unveiled what it presented as the first robot able to complete tasks while holding conversations powered by GenAI technology from OpenAI. It is impressive, and yet concerning, when we realize how fast technology is moving across industries.

One of the plenary discussions at DIA’s Medical Affairs and Scientific Communications Forum (MASC) 2024 presented a reminder that while deep learning (2017) and GenAI (2021) are the latest developments in AI, the technology dates back to the 1950s.

In that context, GenAI is still in its infancy. The industry has had more time to adjust to the challenges brought by each preceding advance in AI. While the emergence and rapid evolution of GenAI may seem daunting, the fundamental principles surrounding the ethical and responsible use of AI remain the same. The global life sciences industry is taking a cautious approach to allowing GenAI near patients, and rightly so, according to Stephen Pyke, chief clinical data and digital officer at Parexel.

GenAI’s Communication Behavior

GenAI, the youngest member of the deep learning branch of AI, is having a moment. Instances where GenAI has rewritten history or had a 3 AM meltdown have been widely publicized, and whistleblowers are drawing attention to more serious risks associated with the technology. Yet while GenAI is under continuous scrutiny, its development has not paused. It continues to race forward, and society is watching what goes right and what goes wrong at a steady pace.

As many of us have experienced when working with GenAI, it can create fictional content. While that alone is concerning, what is becoming increasingly important to understand is that it presents that content as fact.

During the plenary session "Leveraging AI Responsibly and Ethically in Life Sciences R&D," Pyke eloquently explained the dangers of GenAI's tendency to fib by contrasting it with human behavior.

When humans are unfamiliar with a topic, they tend to generalize, which makes the conversation vague. That vagueness is a social cue that the person may not understand the topic well or may not have the correct facts. Unfortunately, GenAI works in reverse: it overcompensates by providing detailed information that appears factual.

Therefore, a human must scrutinize the information to verify its accuracy, a step that opens the door to various human biases. Counter to expectations, this verification also reduces efficiency. Pyke said emerging research is examining whether, in some cases, using GenAI actually reduces efficiency and effectiveness.

The D&B Words

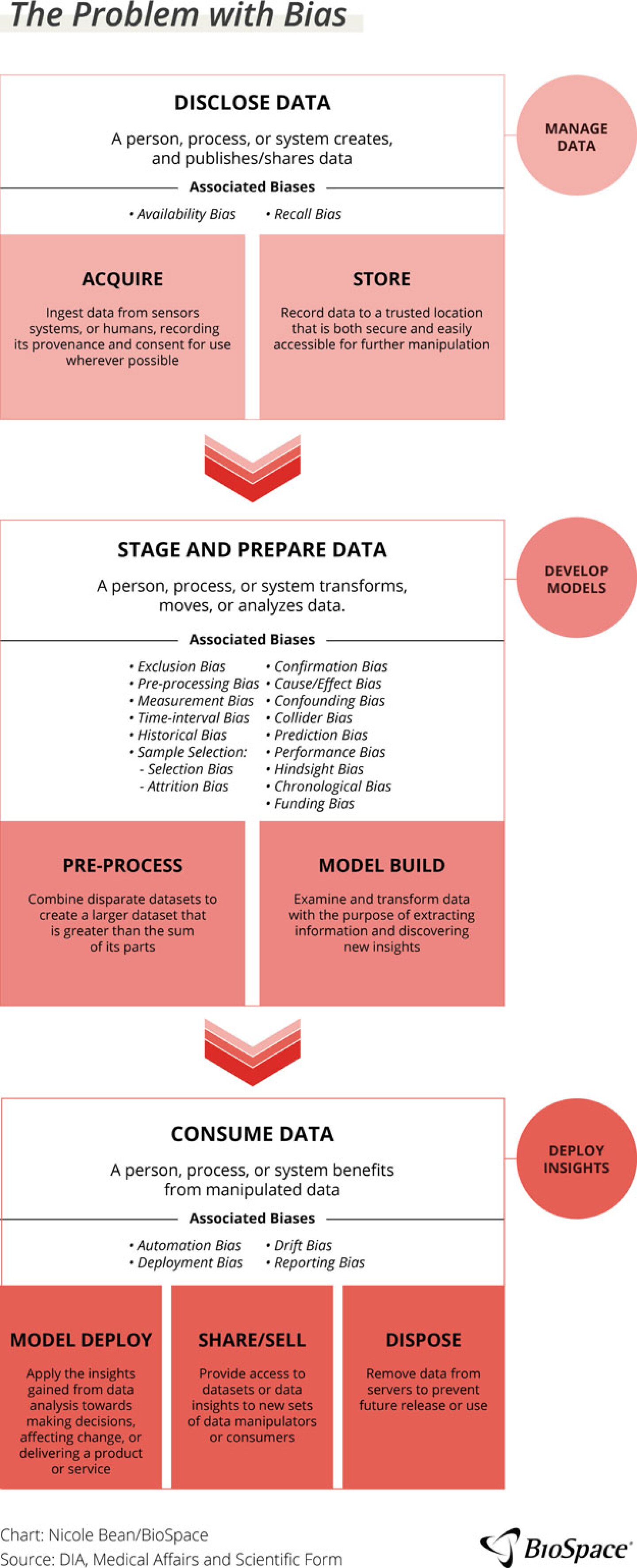

While voices throughout the industry express exhaustion with "the data conversation," data quality and bias must still be discussed. In the same session, Sherrine Eid, MPH, global head of RWE, epidemiology and observational research at the SAS Institute Inc., presented more than 20 types of data bias. These biases, she explained, pose risks to AI across the entire data chain: from the disclosure of data (acquiring and storing it), to staging, preparing and analyzing it (the prep process and model builds), to consuming it (model development and deployment of insights). And these are only the biases the industry is aware of right now.

As Pyke pointed out, because the industry does not know what data commercial models were trained on, it is impossible to understand all the ways bias can enter the process or which types of questions elicit erroneous answers. Combating bias requires a combination of training and familiarity, so that users know what to look for, along with the recognition that AI is a tool. It is neither all good nor all bad in its own right; its impact depends on how the industry approaches the technology.

Eid highlighted that the industry accepts a certain level of bias, even with training. This bias may stem from reviewers' own experience or training, adding another layer of confirmation bias: reviewers who expect certain results may accept inaccurate results from AI.

Because AI systems do not work without humans, both systemic and human biases must be understood to see the entire picture. She suggested that the industry measure the margins of error for AI and decide what is acceptable. Doing so will require open communication among regulators, technology companies and industry peers about what level of risk is acceptable. For the present and immediate future, Eid challenged the industry to run simulation tests that assess the level of bias and its downstream effects, in order to decide whether that bias is acceptable.

We Don’t Know

As mentioned above, the industry does not know what data commercial models were trained on, so many questions about bias remain. Pyke gave the example that ChatGPT's last training was in 2021, so its responses to questions about events after that point are not reliable.

As a start, Eid recommended that the industry reach out to technology providers to address concerns about the data and the models. Pyke was less optimistic about the success of such conversations, noting that these organizations have significant vested economic interests in protecting that information.

Both agreed that the most effective way to combat data bias, whether automation, systemic or human bias, is increased exposure to AI applications, which appears to be happening organically across society. Training users to be mindful of the close relationship between the questions they input and the responses GenAI provides, as well as the importance of data quality, can help mitigate some bias risk. More importantly, as the technology continues to evolve, it is imperative to empower users to recognize when GenAI is partially or completely correct. Pyke urged attendees to pause and apply lessons learned from previous AI experiences to GenAI.

As a follow-up to the session, Pyke spoke to BioSpace about the EU AI Act's implications for the life sciences industry. He noted that the Act is a significant legal landmark because it requires AI developers to comply with EU copyright law, which means an obligation to be transparent about the data used in training and, potentially, to pay royalties for its use.

While the life sciences industry is already closely overseen by powerful regulatory agencies, "the Act will take a risk-based approach, where those AI applications most proximal to the patient will be subject to the most stringent demands," Pyke stated.

He noted that the "clearest guidance yet provided by regulatory agencies on the use of AI in drug development is to seek advice, and to do so early." According to Pyke, compliance with the new Act is a key area of uncertainty for many developers.

Lori Ellis is the head of insights at BioSpace. She analyzes and comments on industry trends for BioSpace and clients. Her current focus is on the ever-evolving impact of technology on the pharmaceutical industry. You can reach her at lori.ellis@biospace.com. Follow her on LinkedIn.